Automotive Prototype Vehicles

Electric Vehicle Concept Car

Background

Icoteq was approached to develop the technology and supply all the electrics, electronics and software for a one-off driverless electric concept car build.

The vehicle requirements were:

- Fully electric battery powered vehicle

- Wireless remote control from an Android tablet to simulate an autonomous vehicle (no in-car steering, pedals or drive controls)

- Standard lighting features (e.g., main beam, fog, brake, DRL, CHMSL, front/rear indicators) and numerous bespoke interior and exterior lighting features

- Motors, actuators and control systems for:

  - Main drive, steering and braking, with a maximum speed of 15 km/h

  - Parking brake engage/release

  - Doors based on gull-wing and sliding sections

  - Rear boot/trunk gas struts

  - Boot/trunk and door latch/release

  - Bespoke adjustable seating (recline, upright), plus full seat rotation to improve accessibility during ingress/egress

  - Centre console assembly movement

- In-car infotainment system, including:

  - A curved 4K HD display

  - A sound system

  - A centre console unit housing:

    - A user touchscreen for accessing comfort controls (climate, seating, sound levels)

    - Two wireless charging points

    - A bespoke bezel controller with a built-in display, allowing the user to interact with the infotainment system

    - A gesture-activated volume control

- Air conditioning system

- Battery charging solution

- Interior camera for delivering a passenger live-feed

- 3G/4G/WiFi connectivity with the ability to run cloud-content on the infotainment system

The vehicle had to be built from scratch and delivered for a showcase within a six-month time frame.

All aspects of the aesthetic design were driven by the end-customer’s in-house design team, so many new requirements emerged as the project progressed.

Software

Icoteq’s aim from the outset was to develop more than 95% of the system software in Python to run on Linux. A further goal was to be able to containerize the entire software system into a single Docker image that could be deployed and executed on any computer running Docker. This would allow all system functions to be simulated, the API to be tested, and integration with a third-party cloud backend system to be carried out.

The software encompassed:

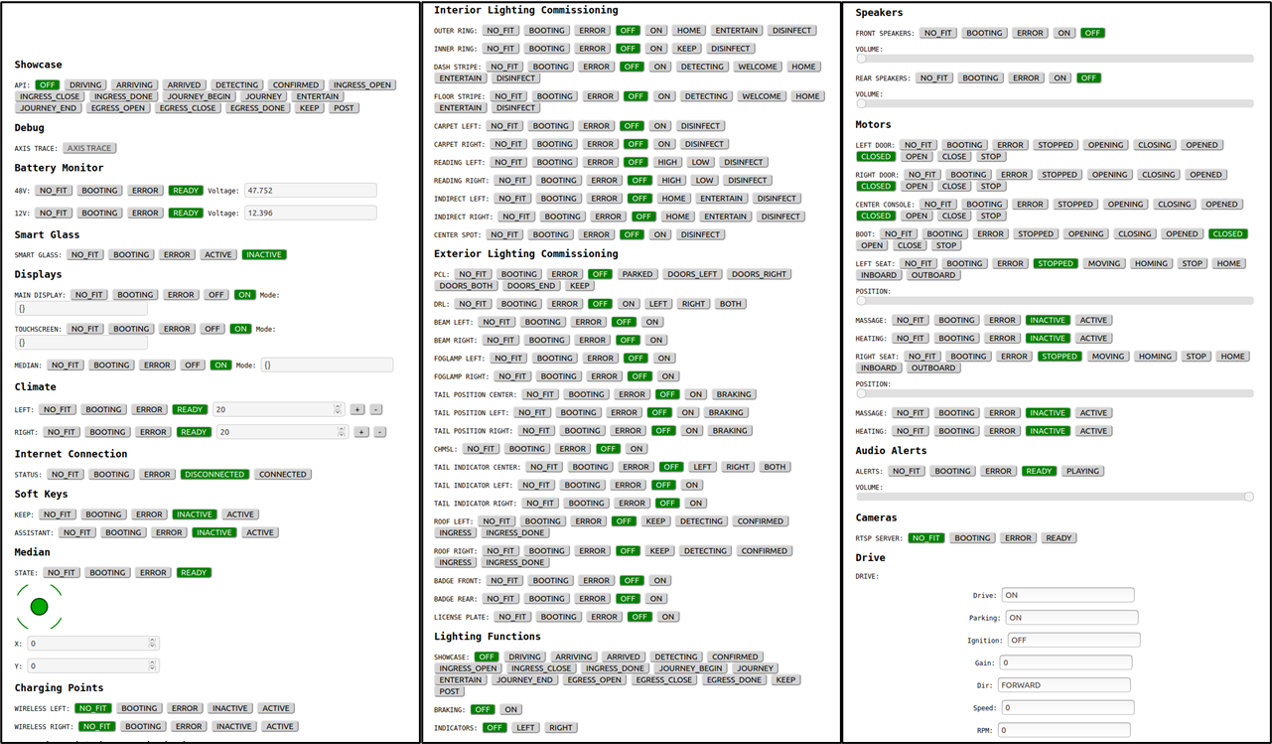

- Web-based user interfaces for diagnostics and motor control (see below)

- Web-based animations for the touchscreen display

- Web-based content deployed from the cloud to be rendered on the infotainment display

- Emulations of in-car control devices (e.g., push buttons, centre console bezel)

Frameworks

To this end, at a very early stage in the design, we evaluated and adopted two software frameworks to underpin the main development:

- The CANopen software stack was based on the open-source library (https://github.com/christiansandberg/canopen). This simplified the task of defining our CAN bus messaging interfaces via so-called EDS (Electronic Data Sheet) files. It also made it easy to support time synchronization across the different compute nodes in the vehicle, which mattered particularly for some of the lighting features.

- Python websockets were used to communicate with system-wide endpoints through a common websocket API.
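The article does not say which CANopen mechanism carried the time synchronization. One standard option defined in CiA 301 is the TIME object (default COB-ID 0x100), whose 6-byte TIME_OF_DAY payload holds milliseconds since midnight (28 bits) followed by days since 1 January 1984 (16 bits). The standard-library sketch below only illustrates that wire format; a CANopen stack would normally build and transmit the frame itself, so treat the function names as hypothetical rather than as the project's code.

```python
import struct
from datetime import datetime, timedelta, timezone

# CiA 301 TIME object: default COB-ID and epoch (1 January 1984, 00:00 UTC).
TIME_COB_ID = 0x100
CANOPEN_EPOCH = datetime(1984, 1, 1, tzinfo=timezone.utc)

def encode_time_of_day(ts: datetime) -> bytes:
    """Pack a timezone-aware datetime into the 6-byte TIME_OF_DAY payload."""
    delta = ts - CANOPEN_EPOCH
    # Milliseconds after midnight use 28 bits; the top 4 bits are reserved (0).
    ms = delta.seconds * 1000 + delta.microseconds // 1000
    return struct.pack("<IH", ms & 0x0FFFFFFF, delta.days)

def decode_time_of_day(payload: bytes) -> datetime:
    """Unpack a TIME_OF_DAY payload back into a UTC datetime."""
    ms, days = struct.unpack("<IH", payload)
    return CANOPEN_EPOCH + timedelta(days=days, milliseconds=ms & 0x0FFFFFFF)
```

A time master would broadcast this frame periodically, and each lighting node could use the decoded value to correct its local clock offset so that animations spanning several nodes start on the same tick.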

API

We designed and developed a JSON websocket API that supported both asynchronous events and remote procedure calls. The API acted on resources in a well-defined, system-wide resource tree representing the different functions of the vehicle (e.g., seating, doors, drive system, lighting, main display, touchscreen). Although the API websocket endpoint was centralized, the compute nodes were distributed over CAN bus, so the API service also supported request routing over CANopen.
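The message format is not published, so the following is a hypothetical sketch of how one JSON API can combine request/response RPCs with asynchronous events over a single resource tree. The field names (`id`, `method`, `path`, `params`, `type`), the `get`/`set` methods and the resource paths are invented for illustration. The transport is left abstract: `handle()` would be called from a websocket server's message handler, and each `send` callback would write to one connected client.

```python
import json
from typing import Any, Callable, Dict, List

class ResourceTree:
    """Hypothetical resource tree serving JSON RPC requests and pushing events."""

    def __init__(self) -> None:
        self.state: Dict[str, Dict[str, Any]] = {}
        self.subscribers: List[Callable[[str], None]] = []

    def add_resource(self, path: str, **initial: Any) -> None:
        self.state[path] = dict(initial)

    def subscribe(self, send: Callable[[str], None]) -> None:
        # In a server, 'send' would forward text to one connected websocket client.
        self.subscribers.append(send)

    def _emit(self, path: str, changes: Dict[str, Any]) -> None:
        event = json.dumps({"type": "event", "path": path, "changes": changes})
        for send in self.subscribers:
            send(event)

    def handle(self, raw: str) -> str:
        """Process one JSON request and return the JSON reply."""
        msg = json.loads(raw)
        path, method = msg.get("path"), msg.get("method")
        reply: Dict[str, Any] = {"type": "result", "id": msg.get("id")}
        if path not in self.state:
            reply.update(type="error", error=f"unknown resource {path!r}")
        elif method == "get":
            reply["result"] = self.state[path]
        elif method == "set":
            changes = msg.get("params", {})
            self.state[path].update(changes)
            reply["result"] = self.state[path]
            # Every subscriber, not only the caller, is told about the change.
            self._emit(path, changes)
        else:
            reply.update(type="error", error=f"unknown method {method!r}")
        return json.dumps(reply)
```

Echoing the request `id` in the reply lets a client match responses to outstanding calls, while `type: "event"` messages can arrive at any time in between.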

Through the API, all system-wide resources were “discoverable”: periodic CAN bus heartbeats allowed their status to be ascertained quickly, and state changes were notified to subscribed clients via the websocket.
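The heartbeat format is standard CANopen: each node periodically sends a one-byte frame on COB-ID 0x700 + node ID carrying its NMT state (0x00 boot-up, 0x04 stopped, 0x05 operational, 0x7F pre-operational). A minimal monitor, assuming the caller passes in each received frame with a timestamp, might look like the following. The class, the `OFFLINE` pseudo-state and the timeout policy are illustrative assumptions.

```python
from typing import Dict, List, Optional, Tuple

HEARTBEAT_BASE = 0x700
NMT_STATES = {0x00: "BOOTUP", 0x04: "STOPPED", 0x05: "OPERATIONAL", 0x7F: "PRE-OPERATIONAL"}

class HeartbeatMonitor:
    """Track node liveness from CANopen heartbeat frames (a sketch)."""

    def __init__(self, timeout_s: float) -> None:
        self.timeout_s = timeout_s
        self.last_seen: Dict[int, float] = {}
        self.state: Dict[int, str] = {}

    def on_frame(self, cob_id: int, data: bytes, now: float) -> Optional[Tuple[int, str]]:
        """Process one received frame; return (node_id, new_state) on a state change."""
        if not (HEARTBEAT_BASE < cob_id <= HEARTBEAT_BASE + 0x7F) or len(data) < 1:
            return None  # not a heartbeat frame
        node_id = cob_id - HEARTBEAT_BASE
        # Bit 7 is not part of the state value, so mask it off.
        new_state = NMT_STATES.get(data[0] & 0x7F, "UNKNOWN")
        self.last_seen[node_id] = now
        if self.state.get(node_id) != new_state:
            self.state[node_id] = new_state
            return node_id, new_state
        return None

    def check_timeouts(self, now: float) -> List[int]:
        """Mark nodes whose heartbeat is overdue as OFFLINE and return their IDs."""
        lost = []
        for node_id, seen in self.last_seen.items():
            if now - seen > self.timeout_s and self.state.get(node_id) != "OFFLINE":
                self.state[node_id] = "OFFLINE"
                lost.append(node_id)
        return lost
```

Each `(node_id, state)` change returned by `on_frame()` or `check_timeouts()` is the kind of event that could be forwarded to websocket subscribers.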

This approach also meant that compute tasks could, if necessary, be relocated seamlessly from one compute node to another, even at run time, without rebooting any of the compute nodes.
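One way to get that run-time relocation is for the central API service to resolve each resource path to the CAN node currently hosting it, so that moving a task only means updating one table entry. The sketch below uses longest-prefix matching on resource paths. The `ResourceRouter` class, the paths and the node IDs are hypothetical, and the actual CANopen transfer of the request is omitted.

```python
from typing import Dict, Optional

class ResourceRouter:
    """Map resource-tree prefixes to the CAN node currently hosting them (hypothetical)."""

    def __init__(self) -> None:
        self.routes: Dict[str, int] = {}

    def assign(self, prefix: str, node_id: int) -> None:
        # Re-assigning an existing prefix is how a task would be relocated at run time.
        self.routes[prefix.rstrip("/")] = node_id

    def resolve(self, path: str) -> Optional[int]:
        """Return the node for the longest matching prefix, or None if unrouted."""
        best, best_len = None, -1
        for prefix, node_id in self.routes.items():
            matches = path == prefix or path.startswith(prefix + "/")
            if matches and len(prefix) > best_len:
                best, best_len = node_id, len(prefix)
        return best
```

Because `"/"` is stored as an empty prefix, assigning it acts as a default route for any path that no more specific prefix matches.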

Video streaming

We developed a custom GStreamer pipeline to live-stream 4K video on demand over RTP across the WiFi network, from the 4K USB camera connected to the Mini PC NUC. The RTP server supported multiple client connections, allowing the in-vehicle experience of passengers to be shown in real time on a large 4K display at the showcase, as well as on mobile devices.
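The actual pipeline is not given. The sketch below builds a plausible pipeline description using common elements: `v4l2src` for the USB camera, `x264enc` for H.264 encoding, `rtph264pay` for RTP packetization and `multiudpsink`, whose `clients` property takes a comma-separated `host:port` list so several receivers get the same stream. Device path, resolution, frame rate, encoder settings and client addresses are all assumptions. Many USB cameras only offer 4K as MJPEG, which would need a decode stage, and a hardware encoder may be needed for real-time 4K.

```python
from typing import Iterable, Tuple

def build_rtp_pipeline(device: str, clients: Iterable[Tuple[str, int]],
                       width: int = 3840, height: int = 2160, fps: int = 30) -> str:
    """Return a gst-launch style description streaming H.264 over RTP to UDP clients."""
    client_list = ",".join(f"{host}:{port}" for host, port in clients)
    if not client_list:
        raise ValueError("at least one RTP client is required")
    return (
        f"v4l2src device={device} ! "
        f"video/x-raw,width={width},height={height},framerate={fps}/1 ! "
        "videoconvert ! "
        # Low-latency settings trade compression efficiency for speed.
        "x264enc tune=zerolatency speed-preset=ultrafast ! "
        # Re-send SPS/PPS regularly so late-joining clients can start decoding.
        "rtph264pay config-interval=1 pt=96 ! "
        f"multiudpsink clients={client_list}"
    )
```

The string can be passed to `gst-launch-1.0` or to `Gst.parse_launch()` from PyGObject. For on-demand viewers, `multiudpsink` also provides `add`/`remove` action signals, so clients can join or leave without restarting the pipeline.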

Compute platforms

We decided on a homogeneous, modular approach based principally on the Raspberry Pi 3 and 4, with CAN bus connectivity allowing the compute nodes to be easily distributed.

CAN bus connectivity was provided by the Seeed CAN shield for Raspberry Pi. With over a dozen compute nodes situated around the vehicle, it was important to have a scalable and robust communications network for controlling them.

Because of the intensive processing anticipated for the infotainment system (based on HTML5 running inside Chromium), we also incorporated a high-performance Mini PC desktop computer with an 8th-generation Intel i5 processor, running Ubuntu Linux.

Connectivity

External connectivity for the vehicle was provided by a DrayTek Vigor 3G/4G/WiFi router. This was needed so that the client’s cloud-based software could communicate with the vehicle to trigger infotainment visuals and sounds running inside a Chromium browser on the Mini PC NUC. It also allowed remote VPN access into the vehicle for troubleshooting and software updates.

For security and access control, we used a separate Linux VPN server.

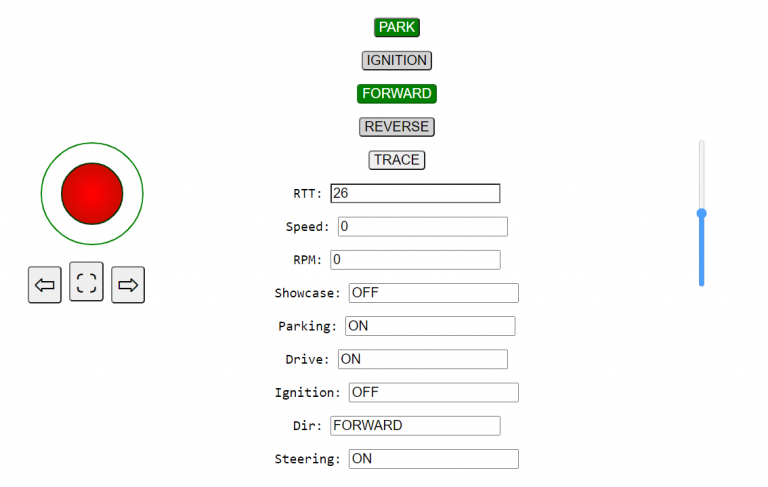

Remote Control

To support a good operating range and high reliability, Icoteq initially recommended the use of an off-the-shelf RC controller for remotely controlling the vehicle steering, braking and speed.

However, to support more extensive interaction with the vehicle operator, we opted for an Android tablet, which provided more control channels and was more intuitive than an RC controller.

To this end, Icoteq developed a websocket-based HTML5 touchscreen application that ran over WiFi on a standard Android tablet to control the vehicle. Intuitive user controls, such as a virtual joystick, were realised on the touchscreen.
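As a sketch of how a virtual joystick position might be turned into drive demands, the code below maps the stick's x axis to a steering angle and its y axis to a speed demand capped at the 15 km/h limit from the requirements. A dead zone stops small unintended touches from moving the vehicle. The steering limit, dead-zone size, sign conventions and the `DriveDemand` type are hypothetical, and the article does not say whether this mapping ran on the tablet or in the vehicle.

```python
import math
from dataclasses import dataclass

MAX_SPEED_KMH = 15.0   # vehicle speed limit from the requirements
MAX_STEER_DEG = 30.0   # hypothetical steering angle limit
DEADZONE = 0.1         # hypothetical: ignore stick deflections below 10%

@dataclass
class DriveDemand:
    speed_kmh: float   # positive = forward, negative = reverse
    steer_deg: float   # positive = right (hypothetical sign convention)

def _shape(value: float) -> float:
    """Clamp to [-1, 1], apply the dead zone and rescale the remaining travel."""
    value = max(-1.0, min(1.0, value))
    if abs(value) < DEADZONE:
        return 0.0
    return math.copysign((abs(value) - DEADZONE) / (1.0 - DEADZONE), value)

def joystick_to_demand(x: float, y: float) -> DriveDemand:
    """Map a virtual joystick position (x = steering, y = throttle) to drive demands."""
    return DriveDemand(speed_kmh=_shape(y) * MAX_SPEED_KMH,
                       steer_deg=_shape(x) * MAX_STEER_DEG)
```

Rescaling after the dead zone keeps the output continuous: demand rises smoothly from zero at the dead-zone edge to full scale at full deflection.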

Using WiFi, however, reduced the effective operating range of the controller to 15-20 m in typical radio conditions. A number of safety features were built into both the client application and the vehicle motor control software to bring the vehicle to a safe, controlled stop in the event of a communications failure or wireless network outage.
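The specific safety features are not described. One common building block is a communications watchdog on the vehicle side: if no control message (or keep-alive) arrives within a timeout, the speed demand is ramped down to zero instead of holding the last command. The sketch below shows that idea with hypothetical timeout and deceleration values. A real system would also engage the brakes and handle other failure modes.

```python
import math
from typing import Optional

class CommsWatchdog:
    """Ramp the speed demand to zero when control messages stop arriving.

    Timeout and deceleration values are hypothetical placeholders.
    """

    def __init__(self, timeout_s: float = 0.5, decel_kmh_per_s: float = 5.0) -> None:
        self.timeout_s = timeout_s
        self.decel_kmh_per_s = decel_kmh_per_s
        self.last_msg_time: Optional[float] = None
        self.demand_kmh = 0.0

    def on_control_message(self, speed_kmh: float, now: float) -> None:
        """Record a fresh demand from the tablet (or a keep-alive repeating it)."""
        self.last_msg_time = now
        self.demand_kmh = speed_kmh

    def update(self, now: float, dt: float) -> float:
        """Call once per control cycle; returns the speed demand to apply."""
        link_lost = self.last_msg_time is None or now - self.last_msg_time > self.timeout_s
        if link_lost:
            # Decelerate at a fixed rate rather than stopping abruptly.
            step = self.decel_kmh_per_s * dt
            if abs(self.demand_kmh) <= step:
                self.demand_kmh = 0.0
            else:
                self.demand_kmh -= math.copysign(step, self.demand_kmh)
        return self.demand_kmh
```

For this to work, the tablet has to send messages periodically even when the joystick is idle, so that silence reliably means a lost link.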

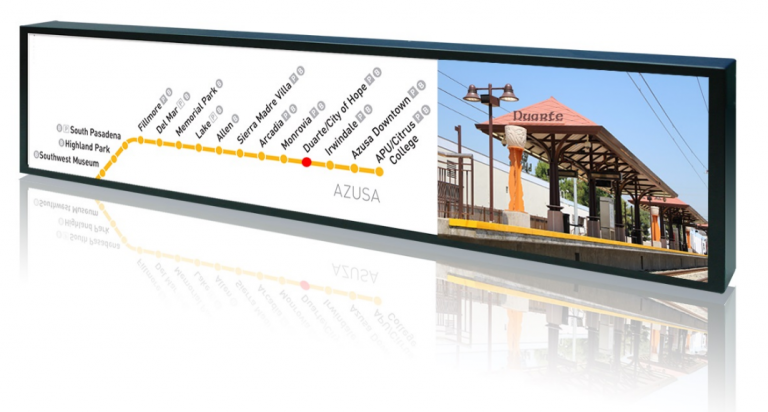

Main Display

The requirements for the main display were very challenging: the client needed a curved, one-third-height 4K display with an effective resolution of 3840x720 (the full 3840-pixel width of a 4K panel, but only a third of its 2160-pixel height).

Icoteq took a novel approach to this requirement: we took an off-the-shelf 4K signage display and disassembled it to access the display elements. We were then able to carefully bend these elements to the required curvature and mount them in a purpose-built housing.

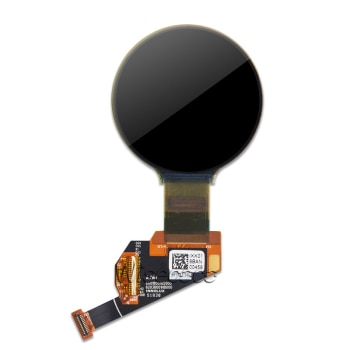

Bezel Display

The end client asked whether a small circular display could be added to the bezel controller. We found a flexible 1.39” AMOLED display, of the kind used in smartwatches, supplied by Wisecoco. Additionally, an HDMI-to-MIPI driver board was sourced to allow integration with an existing Raspberry Pi 4 compute platform we had allocated for the bezel display.

Touchscreen Display

We selected the Tianma 7” 1280x800 touchscreen display because it performed well under bright lighting conditions and offered a consistent, wide viewing angle. Additionally, an HDMI-to-MIPI driver board was sourced to allow integration with an existing Raspberry Pi 4 compute platform we had allocated for the touchscreen display.

Motors

Steering

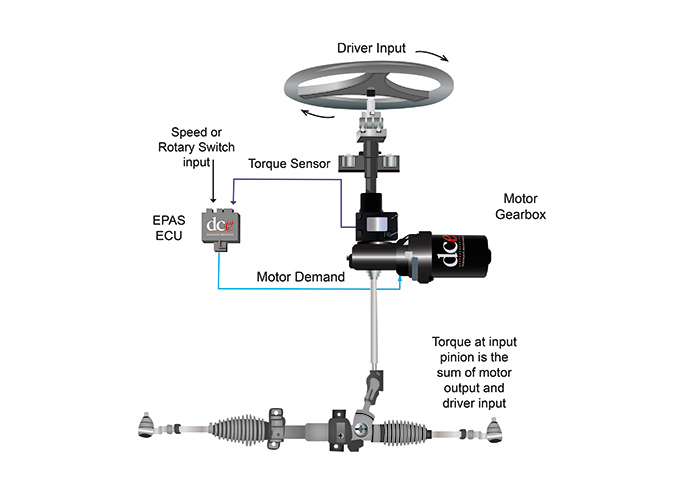

The steering motor selected was the EPAS100A control and drive motor system from DCE Motorsport. It runs off a dedicated 12V battery and has the advantage of being usable for either a power-assisted steering solution or (as in our case) a fully autonomous steering unit.

The steering angle “demand” can be fed directly into the ECU over CAN bus, so the only requirement during installation is to set the maximum torque/speed and angle limits of the steering.

Drive motor

The drive motor selected was the AC-34 AC motor supplied by EV West, driven by a Curtis 1238-7601 controller. The motor can draw peaks of up to 550A, which imposed fairly stringent requirements on the vehicle's power source.

EV West supplies a default firmware image for the Curtis controller with a simple CAN bus interface that allows the motor RPM to be monitored in real time. The motor speed is controlled through the controller's potentiometer input, which we drove with a signal generated from a digital PWM output on one of the compute nodes.
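The two conversions involved can be sketched as follows: turning the RPM reported over CAN into road speed, and turning a speed demand into a PWM duty cycle for the potentiometer input. The gear ratio and wheel diameter are hypothetical (only the 15 km/h limit comes from the requirements). The linear duty-to-speed mapping is an assumption that would need calibrating against the controller's throttle configuration, and direction selection is assumed to be handled separately.

```python
import math

GEAR_RATIO = 8.0         # hypothetical: motor revolutions per wheel revolution
WHEEL_DIAMETER_M = 0.6   # hypothetical rolling diameter of the driven wheels
MAX_SPEED_KMH = 15.0     # vehicle speed limit from the requirements

def rpm_to_kmh(motor_rpm: float) -> float:
    """Convert the motor RPM reported over CAN into road speed in km/h."""
    wheel_rpm = motor_rpm / GEAR_RATIO
    metres_per_minute = wheel_rpm * math.pi * WHEEL_DIAMETER_M
    return metres_per_minute * 60.0 / 1000.0

def speed_to_duty(speed_kmh: float) -> float:
    """Map a speed demand onto a 0..1 PWM duty cycle, capped at the speed limit.

    Assumes the filtered PWM voltage on the potentiometer input maps linearly
    to motor speed; direction is selected elsewhere, so only magnitude is used.
    """
    return max(0.0, min(1.0, abs(speed_kmh) / MAX_SPEED_KMH))
```

With `rpm_to_kmh()` available, the monitored RPM could also close the loop, for example by trimming the duty cycle until measured speed matches demand.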

Brushed DC motors & linear actuators

A range of different brushed DC motors and linear actuators were used in the vehicle with different torque / size requirements for:

- Braking

- Rear boot struts

- Doors

- Seating

Icoteq selected a common H-bridge platform based on Pololu 24V SMC G2 brushed DC motor controllers. These are small and lightweight, easily commissioned over USB, and controllable via a simple UART protocol for setting the demand speed.
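For illustration, the Pololu Simple Motor Controller "compact protocol" sets speed with a command byte (0x85 forward, 0x86 reverse) followed by a speed magnitude from 0 to 3200, split into a low 5-bit byte and a high 7-bit byte. The controller's default safe-start feature also requires an "Exit Safe Start" command (0x83) before the motor will move. The helper names below are hypothetical; check the byte values against Pololu's user guide for the firmware in use.

```python
def exit_safe_start() -> bytes:
    """Compact-protocol 'Exit Safe Start' command, needed before the motor will run."""
    return bytes([0x83])

def set_speed(speed: int) -> bytes:
    """Compact-protocol speed command; speed from -3200 (full reverse) to 3200 (full forward)."""
    speed = max(-3200, min(3200, int(speed)))
    command = 0x85 if speed >= 0 else 0x86   # Motor Forward / Motor Reverse
    magnitude = abs(speed)
    # The magnitude is sent as a low 5-bit byte followed by a high 7-bit byte.
    return bytes([command, magnitude & 0x1F, (magnitude >> 5) & 0x7F])
```

With pyserial, these bytes would be written to the controller's USB serial port (e.g. `serial.Serial("/dev/ttyACM0", 9600).write(set_speed(1600))`, where the port name is an assumption). When several controllers share one serial line, the full Pololu protocol, which adds a device number, would be used instead.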

Motor control

The motor control was based on bespoke software developed by Icoteq entirely in Python for all the motor subsystems. A common CANopen interface was designed to accommodate all the main types of motor axes that were anticipated to be controlled:

- DC brushed motors (SMC UART interface)

- EPAS100A Steering Motor (CAN)

- AC-34 Main drive motor (PWM)

The motor control software supports co-ordinated multiple axis control with limit switch inputs and optional potentiometer feedback to allow for precise position-based demand as well as speed demand.
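The control law is not described, so the following is a minimal sketch of one axis under stated assumptions. When a position target and potentiometer feedback are available, it applies proportional position control with a small tolerance band. Otherwise it passes the speed demand through. In both cases a pressed limit switch blocks further travel in that direction. Positions normalised to 0..1, the gain and the tolerance are hypothetical. Coordinated multi-axis moves could then be built by sequencing or synchronising several such axes.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class AxisInputs:
    at_min_limit: bool
    at_max_limit: bool
    position: Optional[float] = None   # potentiometer feedback (0..1), if fitted

def axis_speed(inputs: AxisInputs, target: Optional[float], speed_demand: float,
               kp: float = 4.0, max_speed: float = 1.0, tolerance: float = 0.01) -> float:
    """Return the speed command (-max_speed..max_speed) for one motor axis.

    Uses proportional position control when a target and feedback exist,
    otherwise passes the speed demand through. Limit switches always win.
    """
    if target is not None and inputs.position is not None:
        error = target - inputs.position
        speed = 0.0 if abs(error) <= tolerance else kp * error
    else:
        speed = speed_demand
    speed = max(-max_speed, min(max_speed, speed))
    # Never drive further into a limit switch that is already pressed.
    if (speed > 0 and inputs.at_max_limit) or (speed < 0 and inputs.at_min_limit):
        return 0.0
    return speed
```

The tolerance band stops the motor from hunting around the target when the potentiometer reading is noisy.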

The motor control software also supports real-time monitoring of all motor axes over WiFi, so the demand speed and position of each axis are known at any time.

Lighting

The client’s goals for the lighting required not only static lighting features (e.g., standard exterior lighting, interior mood lighting), but also basic animations (e.g., indicator sweeping effects) and a number of more sophisticated lighting animations (e.g., interactive cyclic sweeping effects that respond to the environment) that would need to run seamlessly across different physical modules.

Icoteq evaluated off-the-shelf RGB LED lighting controllers but found them too bulky to install, dependent on specialist software and tools, and difficult to integrate into the broader system-wide control scheme we had adopted.

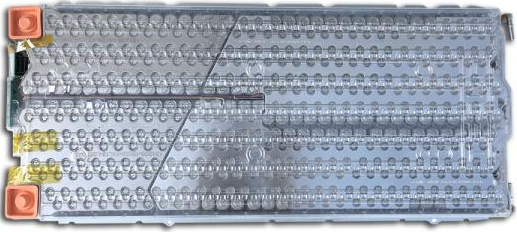

To offer maximum flexibility and seamless integration, Icoteq developed its own LED lighting controller software framework running on Raspberry Pis. Because the Raspberry Pi has reasonable I/O support for driving programmable LED strips, we could drive up to 4 parallel chains of LEDs per Raspberry Pi using only a single CPU core, leaving the remaining cores free for other workloads where necessary.
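One way to make an animation run seamlessly across modules is to compute each frame purely from a shared, synchronised clock. Every node then renders the same frame at the same instant without exchanging per-frame data, which fits the CANopen time synchronization mentioned in the Frameworks section. The sketch below generates a sequential "sweeping" indicator frame this way. The colour, period and half-on/half-off timing are hypothetical, and pushing the frame to a physical strip driver is not shown.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]
AMBER: RGB = (255, 120, 0)   # hypothetical indicator colour
OFF: RGB = (0, 0, 0)

def sweep_frame(t: float, num_leds: int, period_s: float = 0.8,
                colour: RGB = AMBER) -> List[RGB]:
    """Return the colours of a sequential indicator at synchronised time t.

    The frame depends only on t, so nodes sharing a clock stay in step.
    """
    phase = (t % period_s) / period_s   # position within the flash cycle, 0..1
    if phase < 0.5:
        # First half of the cycle: LEDs switch on one after another.
        lit = min(num_leds, int(phase / 0.5 * num_leds) + 1)
    else:
        # Second half: everything off before the next sweep starts.
        lit = 0
    return [colour] * lit + [OFF] * (num_leds - lit)
```

Because the function is stateless, a node that reboots or joins late picks up the animation at exactly the right point as soon as its clock is synchronised.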

The software framework supported driving both real RGB LED hardware and an emulation layer, developed using a graphics library, that simulated individual LEDs, their orientation/location, colour output and diffusion effects. This was especially useful for debugging animation sequences and for sharing proposed animation sequences with the end client.
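The key design idea is that animation code talks to an abstract LED-chain interface, so a real strip driver and an emulator can be swapped freely. The sketch below shows that shape with a `typing.Protocol`, an emulated chain that simply records frames, and a crude neighbour-blending function as a stand-in for diffusion. The original emulation used a graphics library to render LED positions and orientation, which is not reproduced here. All class and function names, and the blending model, are hypothetical.

```python
from typing import List, Protocol, Tuple

RGB = Tuple[int, int, int]

class LedChain(Protocol):
    """Anything that can display one frame of colours on a chain of LEDs."""
    def show(self, pixels: List[RGB]) -> None: ...

class EmulatedChain:
    """Software stand-in for a physical LED chain that records every frame."""

    def __init__(self, name: str, num_leds: int) -> None:
        self.name = name
        self.num_leds = num_leds
        self.frames: List[List[RGB]] = []

    def show(self, pixels: List[RGB]) -> None:
        if len(pixels) != self.num_leds:
            raise ValueError(f"{self.name}: expected {self.num_leds} pixels, got {len(pixels)}")
        self.frames.append(list(pixels))

def diffuse(pixels: List[RGB], spread: float = 0.25) -> List[RGB]:
    """Crudely model a light diffuser by bleeding each LED into its neighbours."""
    n = len(pixels)
    out: List[RGB] = []
    for i, centre in enumerate(pixels):
        lft = pixels[max(i - 1, 0)]        # edge LEDs reuse their own value
        rgt = pixels[min(i + 1, n - 1)]
        r, g, b = (round((1 - 2 * spread) * c + spread * a + spread * z)
                   for c, a, z in zip(centre, lft, rgt))
        out.append((r, g, b))
    return out

def play(chain: LedChain, frames: List[List[RGB]]) -> None:
    """Animation code sees only the LedChain interface, real or emulated."""
    for frame in frames:
        chain.show(frame)
```

A real-hardware class would implement the same `show()` method by writing to the strip driver, so the same animation sequences can be previewed on a desktop and then deployed unchanged to the vehicle.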

Power

The power requirements were driven by the need to be able to run the vehicle for up to 4 hours per day, charging between shows and overnight.

The power was delivered by two Tesla Model S 48V battery packs, which are capable of delivering peak currents in excess of 550A as required by the drive motor.

The battery management system was based on the Dilithium Designs BMS Controller, and the charging system on the Thunderstruck Motors TSM2500.

Conclusion

This project was complex and challenging and required Icoteq to draw on an extremely wide range of its skills including, but not limited to:

- System design

- Distributed network computing

- Embedded software development

- Electrical engineering

- Electronic engineering

- Motor control

- Mobile application development

- Mathematical modelling and physics

- Display and image processing, and display control including touch interfaces

If you have your own automotive development requirements, please get in touch with Icoteq to find out how we could help with your next development.